Syft: An NLP-based VR App

This project was created for TartanHacks 2018.

Collaborators: Michael Li, Anish Krishnan, Vashisth Parekh

Prizes: Google's Best Use of Cloud Platform

GitHub: Here!

Everyone has stage fright, even Bill Gates. You're not alone, and we have a solution for you!

Syft lets you practice speeches on virtual stages so that you can overcome stage fright. It even uses Google's Natural Language Processing (NLP) API to analyze your speech and give you feedback!

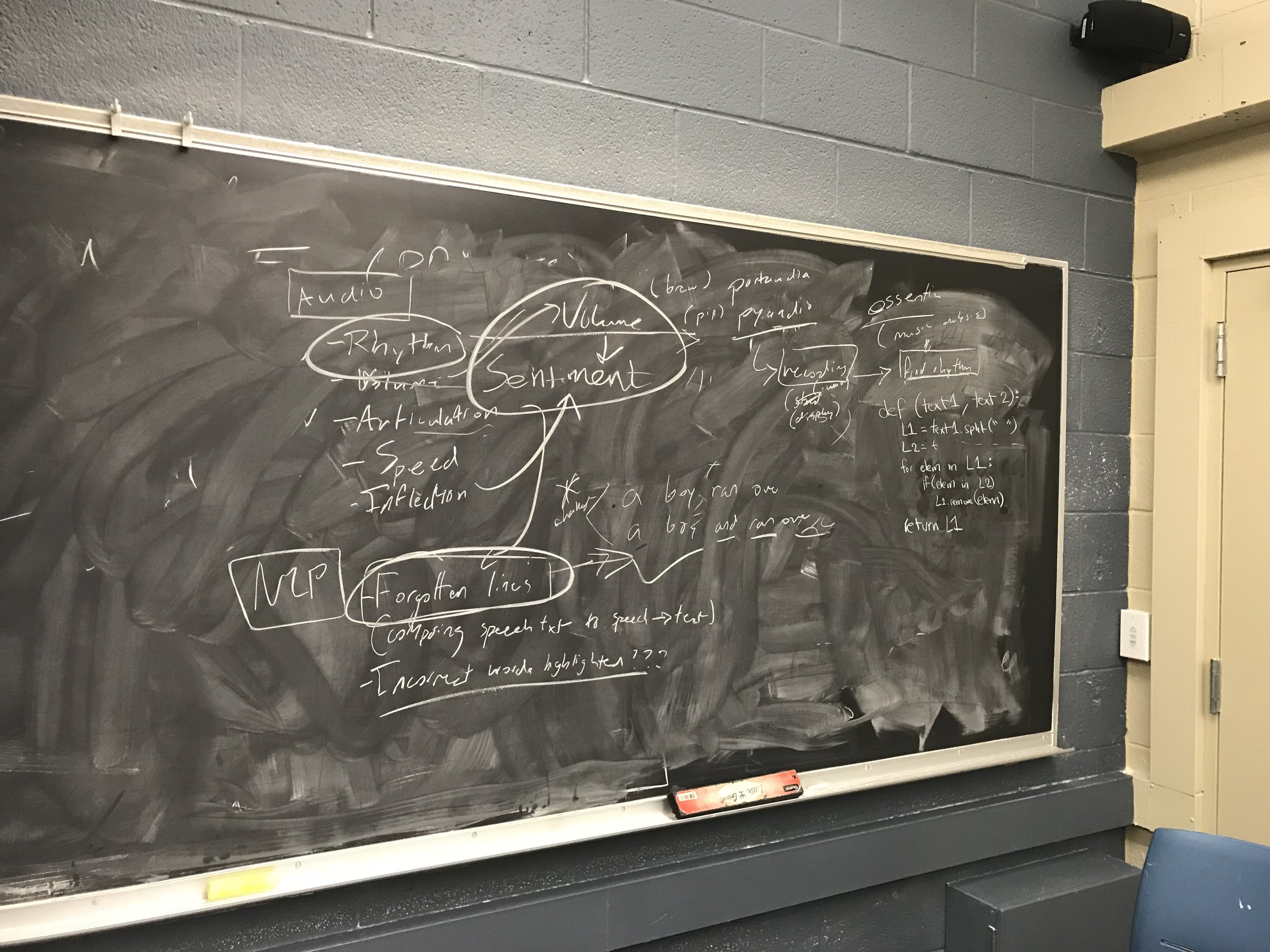

1. Natural Language Processing

Syft combines the Bing Speech to Text API on Microsoft Azure with Google's NLP API. It aggregates linguistic data from the user's speech to evaluate the speaker more accurately.
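The write-up doesn't say how Syft combines the two services' outputs. The sketch below is a hypothetical illustration, not Syft's actual code, and it rests on three assumptions:

- The speech arrives as transcribed chunks.
- Each chunk has already been scored for sentiment in the shape Google's Natural Language API reports it. That shape is a `score` from -1.0 to 1.0 plus a non-negative `magnitude`.
- The `ChunkResult` type and the magnitude-weighted average are illustrative choices.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ChunkResult:
    """Hypothetical per-chunk result: a transcript plus its sentiment."""
    transcript: str   # text from a speech-to-text service
    score: float      # sentiment polarity, -1.0 (negative) to 1.0 (positive)
    magnitude: float  # overall emotional strength, >= 0


def aggregate(chunks: List[ChunkResult]) -> dict:
    """Combine per-chunk results into one speech-level summary.

    Sentiment is averaged with each chunk weighted by its magnitude, so
    emotionally strong passages count more than flat ones (an assumed
    design choice, not a documented one).
    """
    parts = [c.transcript.strip() for c in chunks if c.transcript.strip()]
    full_text = " ".join(parts)
    total_magnitude = sum(c.magnitude for c in chunks)
    if total_magnitude > 0:
        weighted = sum(c.score * c.magnitude for c in chunks) / total_magnitude
    else:
        weighted = 0.0  # no emotional signal at all: report neutral
    return {
        "transcript": full_text,
        "word_count": len(full_text.split()),
        "sentiment": round(weighted, 3),
        "total_magnitude": round(total_magnitude, 3),
    }


if __name__ == "__main__":
    chunks = [
        ChunkResult("Good evening everyone", 0.6, 0.6),
        ChunkResult("today I want to talk about fear", -0.2, 0.4),
    ]
    print(aggregate(chunks))
```

Weighting by magnitude keeps a long, emotionally flat stretch from diluting a short but strongly positive or negative one. A plain mean would treat every chunk equally.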

2. Virtual Reality

Syft takes advantage of the power of VR to place the user on a stage in front of an active crowd, so that speakers can practice their speeches in a more realistic environment.

3. Detailed Analytics

Syft analyzes your speech in real time, evaluating stutters, rhythm, projection, and vigor, and it even applies sentiment analysis.
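The write-up doesn't describe how these qualities are measured. As a hypothetical illustration, the sketch below estimates two of them from a word-level transcript:

- **Stutters:** counted as filler words plus immediately repeated words.
- **Rhythm:** measured as speaking rate plus the variability of the pauses between words.

The `(word, start_seconds, end_seconds)` input format, the filler-word list, and both heuristics are assumptions. Projection and vigor would need the audio signal itself and are not covered here.

```python
import re
from statistics import pstdev
from typing import List, Tuple

# Assumed input format: (word, start_seconds, end_seconds) per recognized word.
Word = Tuple[str, float, float]

FILLERS = {"um", "uh", "er", "erm"}  # hypothetical filler-word list


def normalize(token: str) -> str:
    """Lowercase and strip punctuation so 'I,' and 'i' compare equal."""
    return re.sub(r"[^\w']", "", token.lower())


def count_stutters(words: List[Word]) -> int:
    """Count filler words plus words immediately repeated ('I I think')."""
    tokens = [normalize(w) for w, _, _ in words]
    fillers = sum(1 for t in tokens if t in FILLERS)
    repeats = sum(1 for a, b in zip(tokens, tokens[1:]) if a and a == b)
    return fillers + repeats


def rhythm(words: List[Word]) -> Tuple[float, float]:
    """Return (words per minute, population std. dev. of pauses in seconds)."""
    if len(words) < 2:
        return 0.0, 0.0
    duration = words[-1][2] - words[0][1]
    wpm = len(words) / duration * 60 if duration > 0 else 0.0
    # Gap between the end of one word and the start of the next.
    pauses = [max(0.0, nxt[1] - cur[2]) for cur, nxt in zip(words, words[1:])]
    return wpm, pstdev(pauses)


if __name__ == "__main__":
    sample = [
        ("I", 0.0, 0.2), ("I", 0.3, 0.5), ("um", 0.9, 1.2),
        ("think", 1.3, 1.6), ("so", 1.7, 2.0),
    ]
    print(count_stutters(sample))  # 2: one repeated "I" and one "um"
    print(rhythm(sample))
```

A steady speaker shows a low pause spread. One long hesitation in otherwise even delivery, like the gap before "um" in the sample, pushes that spread up, which makes it a simple proxy for uneven rhythm.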

Made with:

- Microsoft Azure

- Google Cloud Platform

- Unity

- Android SDK